LLM Consumption

This feature allows users to track API calls and token consumption during customer interactions with an LLM-powered chatbot. The data helps you:

- Monitor usage patterns

- Support billing reconciliation

- Manage project costs effectively

Consumption data is populated once daily at the Workspace and LLM level.

Key Highlights:

- Coverage: Workspace Training & AI Inference Calls

- Data Refresh: Once Daily

- Retention Period: 1 year

Here’s what you can do:

- Check Totals in Widgets: View the total number of API calls and tokens consumed in the widgets.

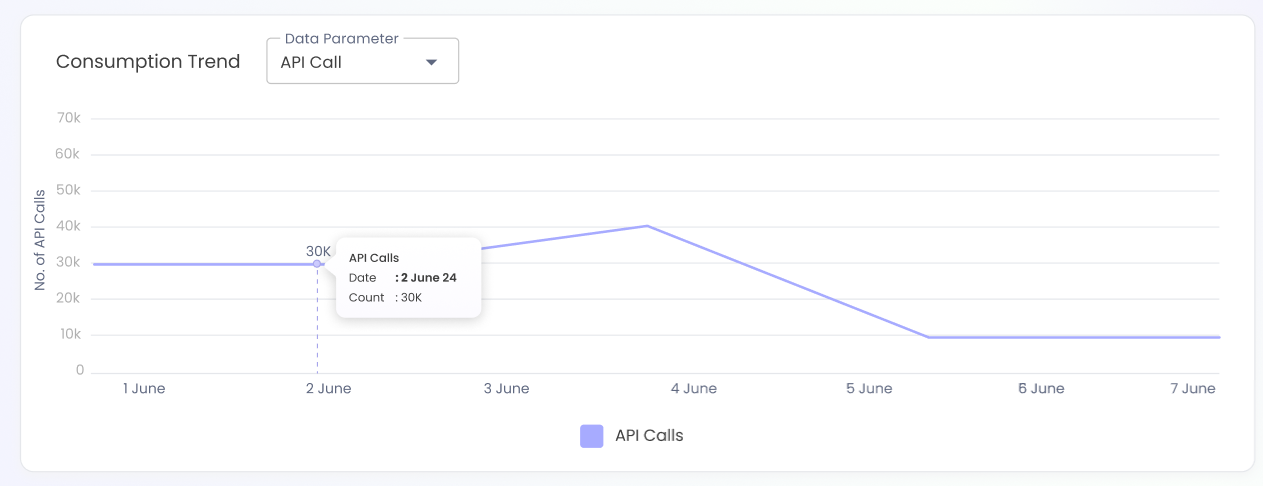

- Track the Consumption Trend in the Line Chart: Use the single-select Data Parameter filter (API Call or Token) to view the consumption trend in the line chart. The Y-axis shows the value of the selected data parameter, and the X-axis shows the time period (months, weeks, or days).

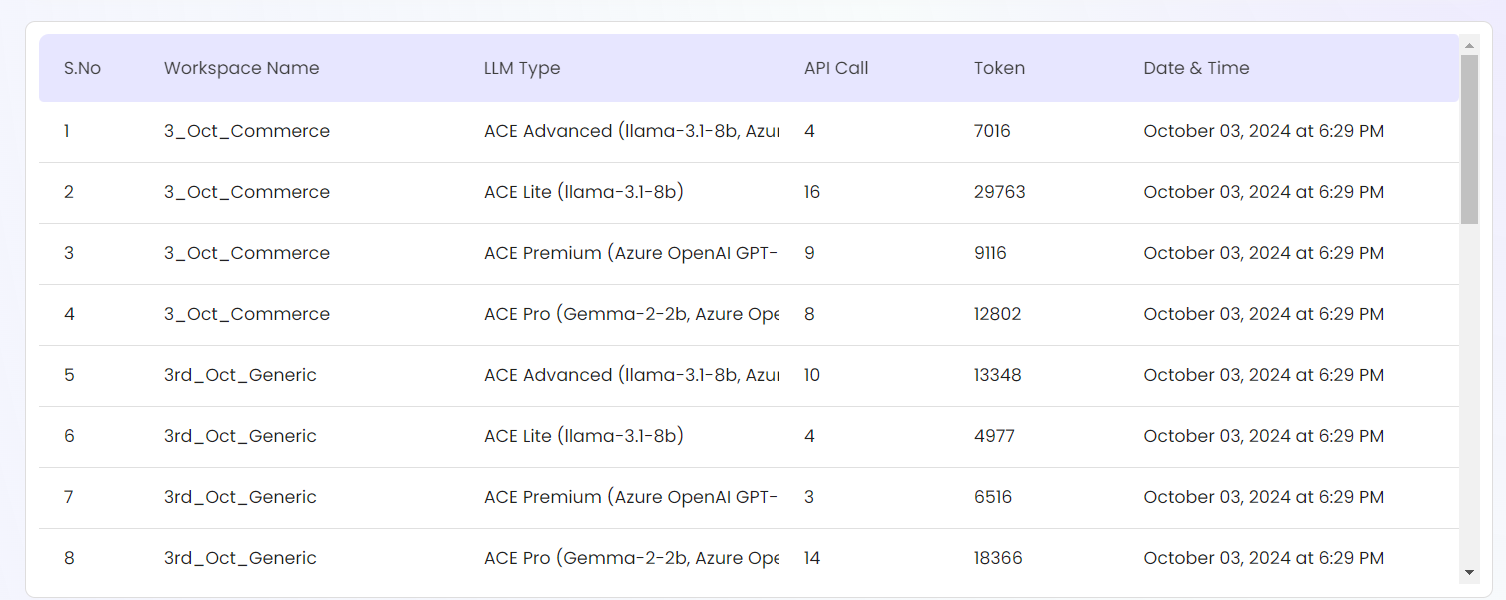

- View Workspace-Level Consumption Info in the Table: Track date-wise consumption at the workspace level for each LLM in the table.

- Filter Data: Select one or more options in the Workspace and LLM filters (multi-select) and click the Apply button to view the filtered data. You can also apply a date range to view consumption data from the last 1 year.

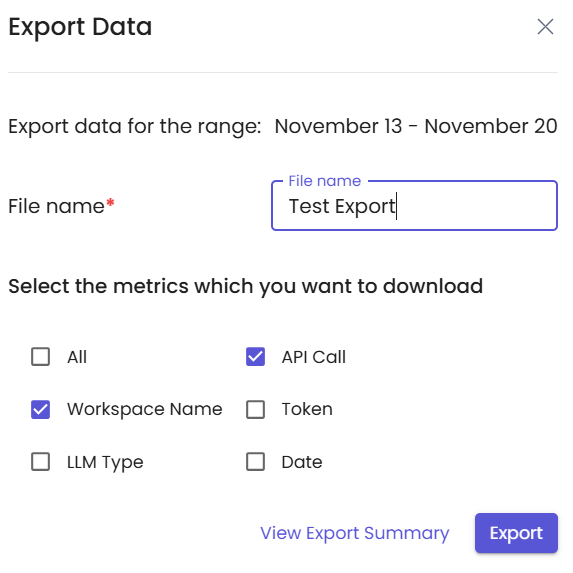

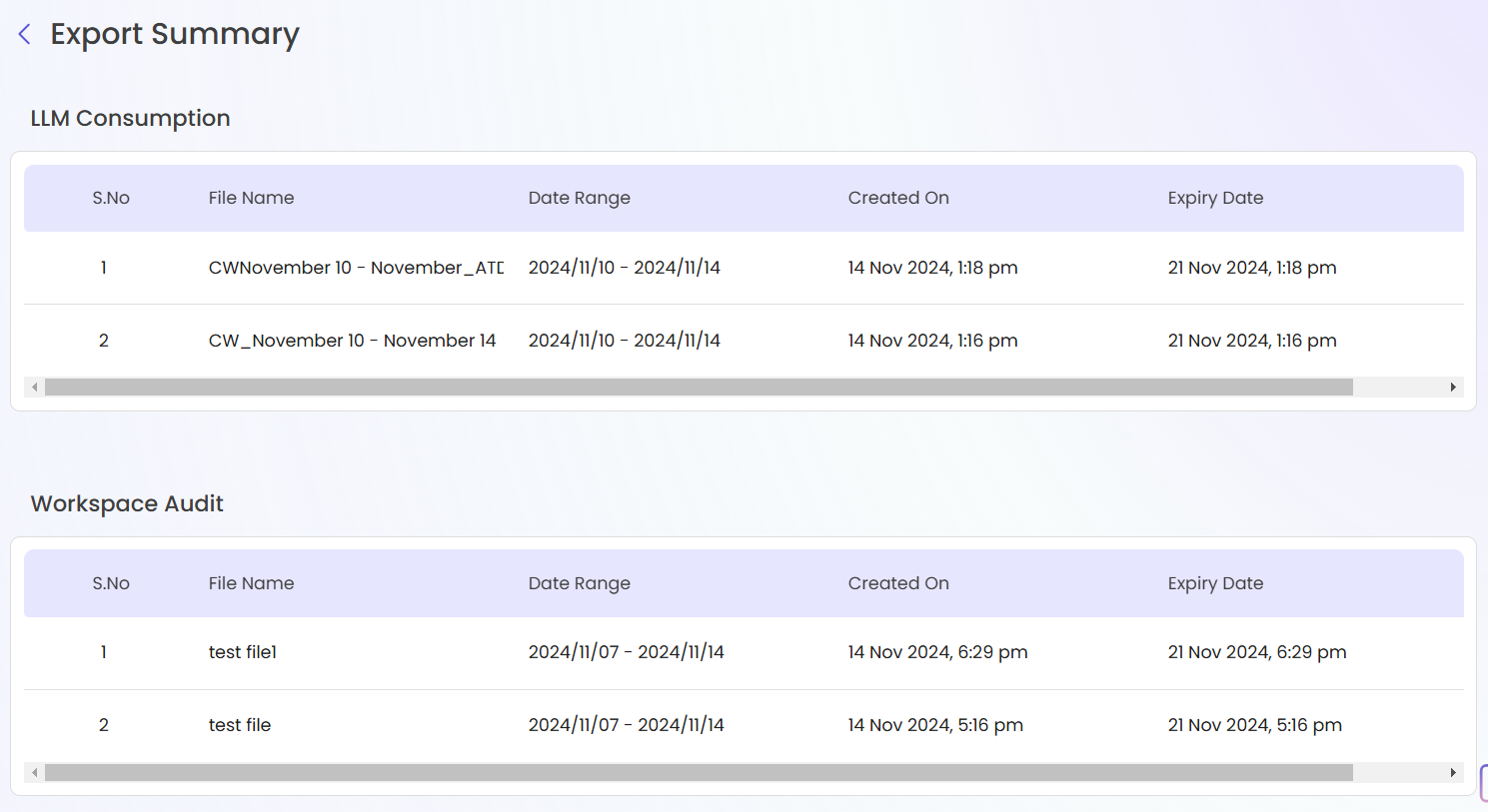

- Export Consumption Data: LLM consumption data can be exported asynchronously by clicking the Export button in the LLM Consumption tab. In the export UI, enter a file name and select the relevant columns. Exports from the last 7 days are listed in the export summary, which you can open by clicking the View Export Summary button in the export UI.
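The filtering and trend views described above can also be reproduced offline, for example on exported data. The following is a minimal Python sketch, not the product's implementation. The `ConsumptionRow` fields, the workspace and model names, and the Monday week start are all assumptions made for illustration, because the export schema is not specified here.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ConsumptionRow:
    # Hypothetical schema: one row per day, workspace, and LLM.
    day: date
    workspace: str
    llm: str
    api_calls: int
    tokens: int


def filter_rows(rows, workspaces=None, llms=None, start=None, end=None):
    """Apply multi-select Workspace/LLM filters and an inclusive date range.

    A filter left as None (or empty) is treated as "no restriction".
    """
    return [
        r for r in rows
        if (not workspaces or r.workspace in workspaces)
        and (not llms or r.llm in llms)
        and (start is None or r.day >= start)
        and (end is None or r.day <= end)
    ]


def bucket_start(day, granularity):
    """Return the first date of the day, week, or month containing `day`."""
    if granularity == "day":
        return day
    if granularity == "week":
        # Assumption: weeks start on Monday.
        return day - timedelta(days=day.weekday())
    if granularity == "month":
        return day.replace(day=1)
    raise ValueError(f"unknown granularity: {granularity!r}")


def trend(rows, parameter, granularity):
    """Sum one data parameter ("api_calls" or "tokens") per time bucket."""
    if parameter not in ("api_calls", "tokens"):
        raise ValueError(f"unknown data parameter: {parameter!r}")
    totals = defaultdict(int)
    for r in rows:
        totals[bucket_start(r.day, granularity)] += getattr(r, parameter)
    # Sort by bucket date so the result reads left to right like the X-axis.
    return dict(sorted(totals.items()))


# Made-up sample data for illustration only.
rows = [
    ConsumptionRow(date(2024, 1, 1), "support-bot", "model-a", 10, 1000),
    ConsumptionRow(date(2024, 1, 2), "support-bot", "model-b", 5, 400),
    ConsumptionRow(date(2024, 1, 8), "sales-bot", "model-a", 7, 700),
    ConsumptionRow(date(2024, 2, 1), "support-bot", "model-a", 3, 300),
]

monthly_tokens = trend(rows, "tokens", "month")
support_only = filter_rows(rows, workspaces={"support-bot"}, llms={"model-a"})
```

Here `trend` mirrors the single-select Data Parameter filter (one parameter per chart), while `filter_rows` mirrors the multi-select Workspace and LLM filters combined with a date range.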

Updated 10 months ago