AI Agent Guardrails (Developer Mode)

Introduction: This feature lets you define and enforce safety and content guidelines for your AI agents, so that they operate within your desired behavior and deliver safe, responsible outputs. Guardrails help strengthen the Trust & Safety layer of AI agents.

1. Understanding AI Agent Guardrails

Guardrails are essential for controlling the behavior of your AI agents. They act as automated checks that review content at two critical stages:

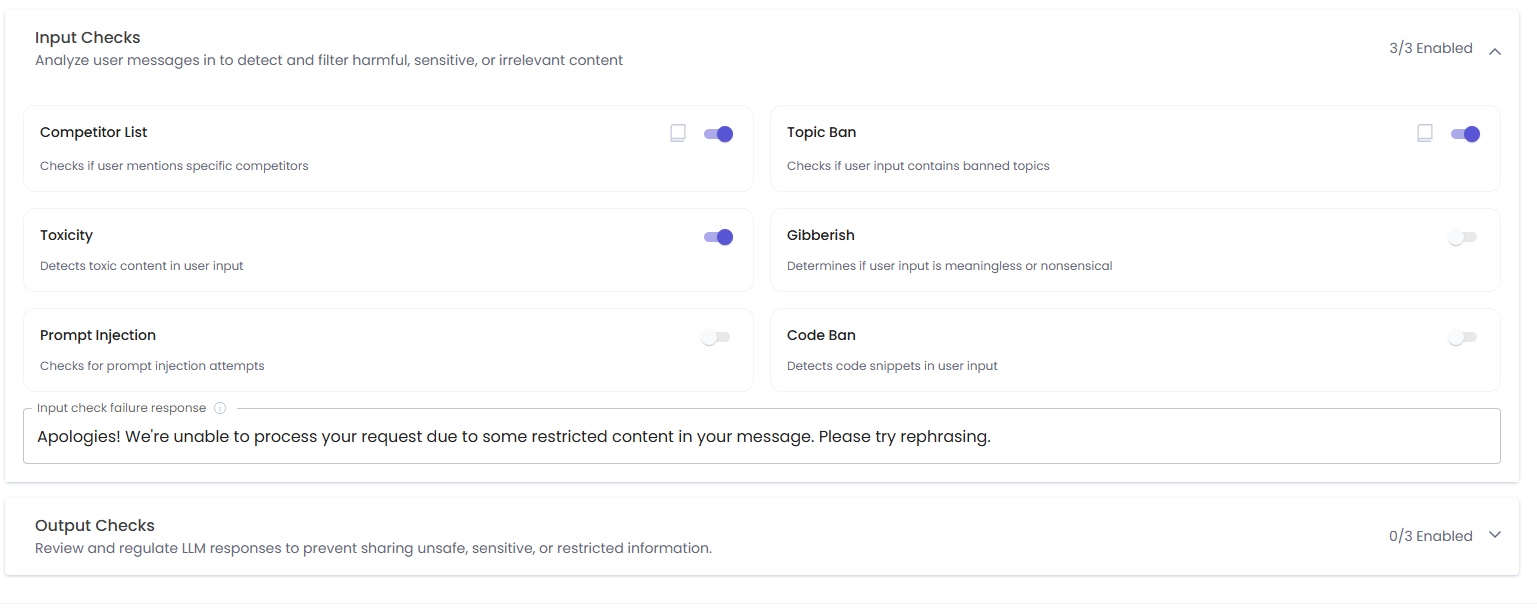

- Input Checks: These analyze the messages or queries that users send to your AI agent. Their purpose is to detect and filter out harmful, sensitive, or irrelevant content before your AI agent processes it.

- Output Checks: These scrutinize the responses generated by your AI agent (LLM output). They ensure that the AI's answers comply with your content and safety guidelines, preventing the sharing of unsafe, sensitive, or restricted information.
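The two stages can be pictured as a thin wrapper around the agent. The following is a minimal sketch, not the product's implementation: `GuardedAgent`, the `generate` callable (a stand-in for the LLM call), and the two toy checks are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List

# A check takes text and returns True when the text passes.
Check = Callable[[str], bool]


@dataclass
class GuardedAgent:
    generate: Callable[[str], str]  # hypothetical stand-in for the LLM call
    input_checks: List[Check]
    output_checks: List[Check]
    input_fallback: str
    output_fallback: str

    def respond(self, user_message: str) -> str:
        # Input checks run before the agent processes the message.
        if not all(check(user_message) for check in self.input_checks):
            return self.input_fallback
        answer = self.generate(user_message)
        # Output checks run on the LLM output before it reaches the user.
        if not all(check(answer) for check in self.output_checks):
            return self.output_fallback
        return answer


# Toy checks, for illustration only.
def no_banned_topic(text: str) -> bool:
    return "lottery" not in text.lower()


def no_secret(text: str) -> bool:
    return "secret-token" not in text.lower()


agent = GuardedAgent(
    generate=lambda msg: f"You asked: {msg}",
    input_checks=[no_banned_topic],
    output_checks=[no_secret],
    input_fallback="Sorry, I can't help with that request.",
    output_fallback="Sorry, I can't share that response.",
)
```

With this sketch, `agent.respond("Hello")` returns the generated answer, a message mentioning "lottery" returns the input fallback without ever reaching `generate`, and an answer containing "secret-token" is replaced by the output fallback.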

2. Accessing Guardrail Settings

The following guardrails are available in the Agent tab of workspace settings:

| Input Checks (6) | Output Checks (4) |
|---|---|
|  |  |

Key Highlights:

- Selection Limits on Guardrails: To keep your AI agent's latency and accuracy optimal, the number of guardrails that can be enabled is restricted. A maximum of 3 input checks and 3 output checks can be enabled per agent. By default, all guardrails are disabled. You can enable or disable each one individually, based on your project's needs, using its toggle.

- Customized Fallback Responses: When an input or output check fails, your AI agent needs a default message to convey the issue to the user. These fallback responses are mandatory and limited to 200 characters each. You define them in the Guardrail settings, which provide two separate text boxes: one for Input Check Failure and one for Output Check Failure.

-

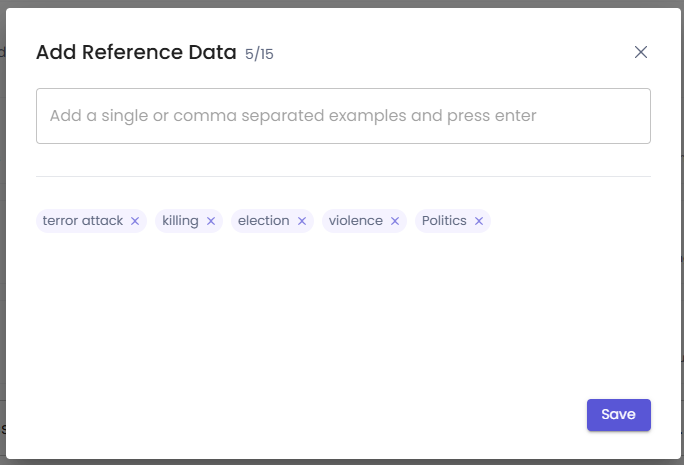

Reference Data Support: For some guardrails, like "Competitor List" and "Topic Ban," you can provide specific data that will be referred while checking the relevant context in input/output
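The selection limits and fallback rules above can be expressed as a small validation routine. This is a sketch under stated assumptions: `GuardrailSettings`, `validate`, and the error strings are hypothetical and do not reflect the product's actual configuration schema. Only the numeric limits (3 checks per stage, 200 characters) come from this page.

```python
from dataclasses import dataclass, field
from typing import Dict, List

MAX_ENABLED_PER_STAGE = 3   # limit stated above: 3 input and 3 output checks
MAX_FALLBACK_CHARS = 200    # limit stated above for each fallback response


@dataclass
class GuardrailSettings:
    # Hypothetical shape: guardrail name -> enabled flag.
    # Empty dicts mirror the "all disabled by default" behavior.
    input_checks: Dict[str, bool] = field(default_factory=dict)
    output_checks: Dict[str, bool] = field(default_factory=dict)
    input_fallback: str = ""
    output_fallback: str = ""


def validate(settings: GuardrailSettings) -> List[str]:
    """Return a list of human-readable problems; empty means valid."""
    errors: List[str] = []
    stages = (
        ("input", settings.input_checks, settings.input_fallback),
        ("output", settings.output_checks, settings.output_fallback),
    )
    for stage, checks, fallback in stages:
        enabled = sum(1 for is_on in checks.values() if is_on)
        if enabled > MAX_ENABLED_PER_STAGE:
            errors.append(
                f"{stage}: {enabled} checks enabled, maximum is {MAX_ENABLED_PER_STAGE}"
            )
        if not fallback.strip():
            errors.append(f"{stage}: fallback response is required")
        elif len(fallback) > MAX_FALLBACK_CHARS:
            errors.append(
                f"{stage}: fallback response exceeds {MAX_FALLBACK_CHARS} characters"
            )
    return errors
```

Returning a list of problems rather than raising on the first one lets a settings form show every issue at once, which is a design choice of this sketch and not something the product is known to do.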

How to Add Reference Data:

- For guardrails that support reference data (indicated by an icon next to the guardrail name), click on the icon.

- A modal will appear where you can add your reference examples.

- Type your example (e.g., a competitor's name, a banned topic) into the input field.

- Press Enter to add the example.

- Each added example will appear as a "chip" with an "X" icon. You can click the "X" to remove an example.

- Click "Save" once you have added all your examples.

Reference Data Guidelines:

- You can add a maximum of 15 examples in reference data for each guardrail.

- Each example is limited to a maximum of 30 characters.
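The guidelines above, together with the add and remove steps for chips, can be sketched as follows. The function names and error messages are hypothetical, and rejecting blank entries is an assumption of this sketch. Only the two limits (15 examples, 30 characters) come from this page.

```python
from typing import List

MAX_EXAMPLES = 15        # limit stated above, per guardrail
MAX_EXAMPLE_CHARS = 30   # limit stated above, per example


def add_example(examples: List[str], new_example: str) -> List[str]:
    """Return a new list with the example appended, or raise ValueError."""
    text = new_example.strip()
    if not text:
        # Assumption: blank entries are rejected.
        raise ValueError("example must not be empty")
    if len(text) > MAX_EXAMPLE_CHARS:
        raise ValueError(f"example exceeds {MAX_EXAMPLE_CHARS} characters")
    if len(examples) >= MAX_EXAMPLES:
        raise ValueError(f"a guardrail accepts at most {MAX_EXAMPLES} examples")
    return examples + [text]


def remove_example(examples: List[str], example: str) -> List[str]:
    """Return a new list without the given example (the chip's "X" action)."""
    return [item for item in examples if item != example]


# Usage: build a short competitor list, then remove one entry.
competitors = add_example([], "Acme Corp")
competitors = add_example(competitors, "Globex")
competitors = remove_example(competitors, "Acme Corp")
```

After these three calls, `competitors` holds only `"Globex"`.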
